What is Technical SEO?

Technical SEO ensures that your website meets the technical requirements which modern search engines demand it. It comprises of following elements:

Essential elements of Technical SEO include

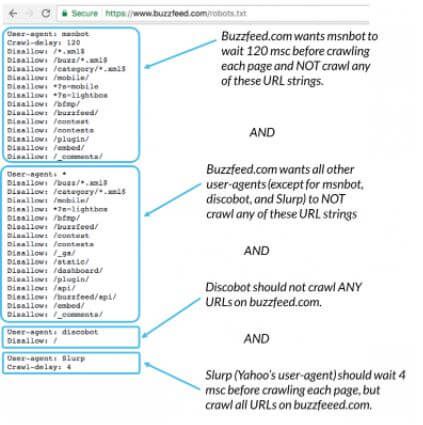

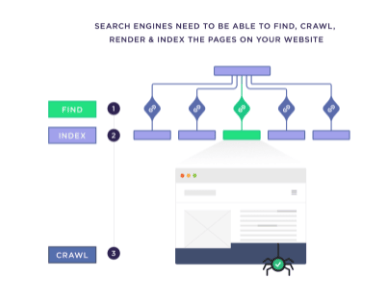

Crawling

Indexing

Rendering

Website architecture.

Why is Technical SEO Important?

Technical SEO helps you achieve the goal of better organic rankings. You have invested in overall SEO like great website design with the best content. But, your technical SEO is not in place. Sadly, you’re not going to rank.

What Does It Take to Rank?

Search engines such as Google and others must be able to find, crawl, render and index the pages on your website.

But this is just the tip of the iceberg. Even after Google indexes all of your site’s content, there is further work to do.

Things You Will Need To Do

To optimize your site for technical search engine parameters, the pages on your website must be secure, mobile-optimized, free of duplicate content, and fast-loading. Numerous factors go into a site’s technical optimization.

Though technical SEO is not a direct ranking factor per Google’s guidelines, it makes it easier for Google to access your content. This directly translates into giving you better chances for ranking.

How Can You Improve Your Technical SEO?

What comes to your mind when we say technical SEO-? Spiders, crawling, and indexing-isn’t it? But, the technical SEO is much wider. The parameters are vivid and have a wider scope.

Here are the critical factors you need to take into account for a Technical SEO Audit:

1. Page Canonicalization Issue

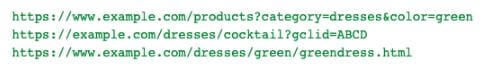

Canonical issues generally occur if a website has more than one URL with similar or identical content. It may be the consequence of the absence of proper redirects. However, canonical issues are also associated with search parameters present on e-commerce sites. They may occur due to syndicating or publishing content on several sites.

For example, a website might load its homepage for all of the following URLs:

What Are Some Common Causes of Canonical Issues?

Various scenarios lead to canonical issues, but Google lists the following as the commonest ones.

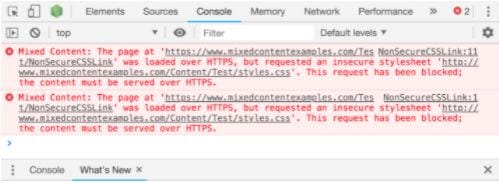

HTTPS vs. HTTP: When an SSL certificate secures your site, it may load both the secured and the non-secured versions of the website. It will result in creating duplicates of all the single pages of your website.

Google considers these as different URLs with the same content. Hence, duplicacy arises again.

URLs Generation Settings for Different Devices Used to View the Page: having disparate websites for desktop users [site].com, mobile users, AMP sites vs.] amp.[site].com, it can lead to canonical issues.

Though there are many ways to create canonical issues on your site, the good thing is you can track the issues and fix them.

2. Improper Canonical Tags

Does Your Site Have Canonical Issues?

As mentioned already, canonical issues may result from HTTP/HTTPS or WWW/non-WWW and others. To find if these issues are present on your site, type all the possible versions of your site’s URL into your browser. For instance:

If these URLs redirect to one particular URL in the set (for example, each of those SEO Experts Company India URLs redirects to https://www.seoexpertscompanyindia.com), It means your site does not have the canonical issues. However, if any URLs do not redirect to the preferred URL, the site has a canonical issue.

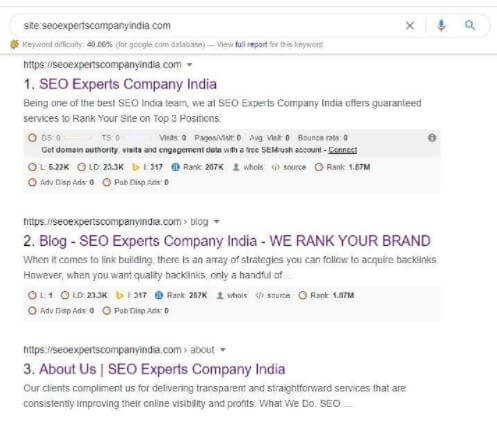

This sounds simple. Isn’t it? However, there can be other challenging and time-consuming issues present. But a relatively straightforward way to discover things that could surprise you. Google, typing in site:[yoursite.com]

Review all of the pages in Google’s index to check if there’s anything unusual.

Fixing the Common Canonical Issues

Typically, you can use two methods to fix canonical issues on your site

Implement a 301 redirect

Add canonical tags to your site’s pages. It tells Google the preferred page for similar-looking pages. Based on the issue you have to resolve, you need to choose the most suitable method.

Implementing Sitewide 301 Redirects for Duplicate Pages

This method is primarily used to resolves issues with HTTP/HTTPS and WWW/non-WWW

Implement a sitewide 301 redirect to the preferred URL version.

How to Set Up a Sitewide Redirect?

You can use several ways to set up a sitewide redirect. The most straightforward and least risky one involves setting up the redirect through the website’s host.

Start with searching Google for either “HTTP to HTTPS redirect [host name]” or “WWW to non-WWW redirect [host name].”

Find out if your host has a support page to explain the procedure to make the required change. Otherwise, contact and get help from your host’s support team.

If you are more resourceful and have developers to help you, ask them to set up your redirects. They may use:

.htaccess (Apache) redirects,

NGINX redirects,

With these changes, you will start noticing traffic and ranking changes. These changes are normal, but after a while, the site’s traffic and rankings will recover.

Add Canonical Tags to All the Pages of Your Site

This method is used for resolving the issues arising due to URLs changing according to user interactions (e.g., e-commerce sites)

In place of letting Google decide canonical pages for your duplicate pages, specify to the search engine which page you want to be considered canonical. Add a rel=canonical tag to all the site’s pages.

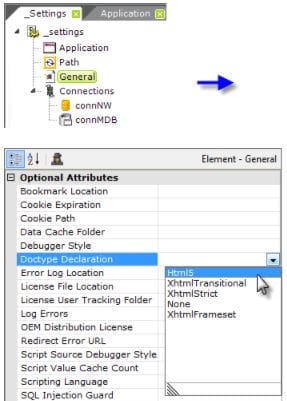

However, it can be highly inconvenient and impractical to add codes to every page of your site. The good thing is that various content management systems have several ways of making canonicalizing your site’s pages easier.

On WordPress sites, you can use the Yoast SEO Premium plugin to automate the addition of self-referential canonical tags to all your site’s pages.

HubSpot CMS allows the users to change their settings where CMS will automatically add self-referencing URLs.

Shopify will automatically add canonical tags to the site’s pages. That’s why site owners do not have to worry about the same.

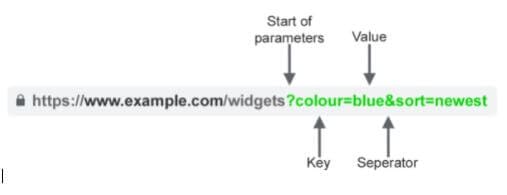

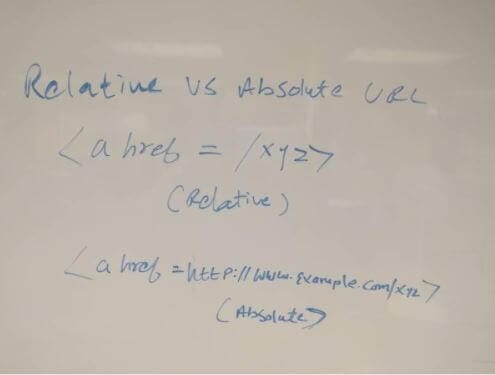

3. URL Structure Issue in Website Technical Audit

The site’s URL structure should be as simple and self-explanatory as possible. A logical URL structure directly corresponds to organizing your site’s content. The URLs should be most intelligible to humans (URLs should use readable words and not long ID numbers). For example, if you’re searching for information about beagles, the following URL can help you decide whether to click that link:

http://en.wikipedia.org/wiki/Beagles

Three most common URL problems

You may see them on different websites. Read further to check if your site has one or more of these problems. We’ve also listed the solutions so that you can fix them as early as possible.

Problem #1: Non-www and www Versions of Site URLs

Sites with a www version and a non-www version for all its URLs will experience a split link value due to the same content on the two URLs. So, you will not get a 100% link value on the page that you want to rank in your search results. You could be getting a 50/50, 60/40, or other kinds of split link value between the two URLs with the same or similar content.

How to Fix?

- First, decide the URL style you want to use, www or non-www.

- Set up a 301 redirect so that all the links to your non-preferred URL style get directed to the right style. It will prevent the wasting of link value due to splitting between two URLs.

- Go to Google Webmaster Tools. Set your preferred domain. Now your search result listings will be consistent with your style preference.

- Use the preferred URL style to build links to your site.

Problem #2: Duplicate Home Page URLs

This is a more severe form of the earlier problem because it is related to your home page. You may have many URLs that go to your home page content. If you haven’t fixed the www and non-www duplication, the problem will be more pertinent and can lead to undesirable duplication. For example:

http://www.mysite.com

http://mysite.com

http://www.mysite.com/index.html

http://mysite.com/index.html

You may have fixed your website for non-www and www problems, but they may still have multiple versions of their home page. There may be sites with several versions and different extensions (.php, .html, .htm, etc.), like in the example above. It would lead to a great deal of duplication and wasted link value.

All these URLs lead to the same content. Now, the link value will get split into the four of them.

How to Fix?

- Set the URL http://www.mysite.com to be your main home page. 301 redirect all other URLs to this URL. Choose the most basic URL as your preferred URL (For example, if non-www is your preferred URL style, then do http://mysite.com)

- At other times, you require having different URL versions for tracking purposes or similar reasons. In such cases, you need to tell the search engine to select the preferred version. Set the version you want to show up in the search results.

- Link to the correct version of the home page URL while building links.

Problem #3: Dynamic URLs

It is a more complex and not-so-SEO-friendly problem shopping cart programs may stumble upon. Including various variables and parameters in your URLs can create an endless number of duplicate content and wasted link opportunities.

Here is an illustration. This set of URLs may lead to the same content:

http://www.mysite.com/somepage.html?param1=abc

http://www.mysite.com/somepage.html?param1=abc&dest=goog

http://www.mysite.com/somepage.html?param1=abc&dest=goog&camp=111

http://www.mysite.com/somepage.html?param1=abc&dest=goog&camp=111&id=423

Even if you rearrange their parameters, they may still show the same content. It can immensely waste the link value of the preferred page.

There are several reasons for the dynamic URL problem. Many companies have statistical purposes of using these as parameter-based URLs. It is necessary to ensure that you are aware of the SEO perspective during this exercise and take measures to fix it lest it affects your outreach or other desirable results.

Affiliates that give their unique ID to use in their links also face the dynamic URLs problem. For example, if you have 100 affiliates linking to a page, each linking page’s URL will be different as each affiliate owns a unique ID.

The Fix

Your site should use SEO-friendly base URLs. Choose a base URL that leads to content in place instead of a URL that uses other parameters. For example, http://www.mysite.com/unique-product.html is much better than http://www.mysite.com/category.php?prod=123 some generic category page is each linking page’s URL base.

- Choose a canonical tag that directs the search engines to employ the base version of the URL. In this way, you can use parameter URLs like http://www.mysite.com/unique-product.html?param1=123¶m2=423 > It will help you in retrieving your data. Moreover, the search engines consider the basic version of the URL as the official one.

- Make sure that you capture the data on the server-side. Subsequently, redirect the visitor to the correct URL while the data gets captured. Using various affiliate networks like ShareASale and CJ, you can carry out the task reasonably simply. Typically, the following parameters help you reach the right visitor links-parameters

Visitor visits the site; the server records parameter data

301 redirects the visitor to the right landing page

The takeaway here is that the visitor hardly notices the switch to the other website as it happens fast. It helps you get the data you need and ensures that the correct page gets the link value while visitors can see what they want.

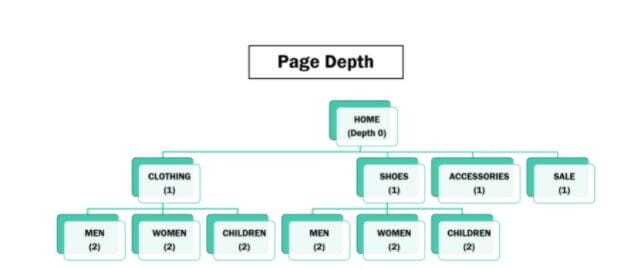

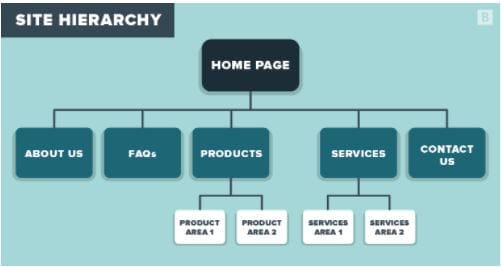

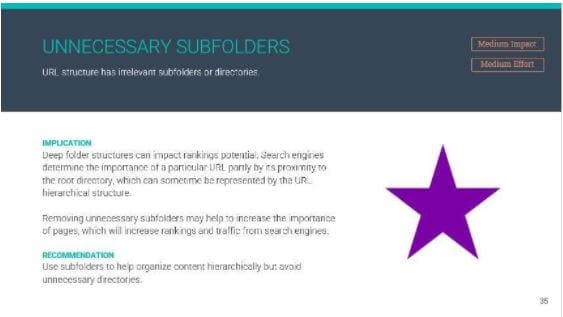

4. Unnecessary Subfolders

Irrelevant subfolders can be a potential deterrent to your site’s ranking. Search engines will evaluate the importance of a URL based on how close it is to the root directory link. Therefore, it is advisable to remove the unrequired subfolders and subpages that go further away from the root directory. This will surely increase the traffic and the rankings to the search engine. The best tip is to use a minimal amount of subfolders to get an excellent hierarchical organization of the content.

5. Unnecessary Subdomains

5. Unnecessary Subdomains

A domain represents the human-readable version of the internet address of a website. Every website must have a unique domain. This is because the domain is the website address. Like every home in a state or a country has its unique address. Similarly, the domain must have it for easy access.

A domain can be quite complex as well, having multiple parts and multiple levels. You can personalize the domain as per your requirements.

How Does Domain Subheading Matter?

Why Do Companies Use Subdomains?

It is widely known that blogs on subdomains offer scanty SEO value. But, several companies still segment their website to include subdomains.

In other cases, companies may own very old websites. So, it may be truly hard to add content in any other way than to add subdomains.

Subdomains are also vital for big time-limited promotions. For example, during an event or otherwise, you think of creating a microsite to host a contest- “contest.website.com” The obvious benefit you get is that you can easily remove it as the contest is over.

A subdomain can also be a good option for pages you don’t expect to give you value in your link-building efforts. You can create temporary ones which you don’t need to rank for anything important.

However, subdomains are also good when you want to segment your audience. Let’s take the example of Craigslist, which offers localized content on newyork.craigslist.com. The apparent reason is that only people in those areas want to see the content. In other cases, when you have an international website and deal with audiences from various regions of the world, offering your content in different languages is made simpler with subdomains. Or you may also have to provide your other products at varying price points. Using the subdomain structure makes sense in such cases. However, make sure you talk to an SEO consultant if a subdirectory wot=uld be a better option.

6. Generic Folder Structure

Here are a few expert tips on managing a practical folder framework for digital asset management systems.

Placing SPACES & UNDERSCORES

When you use an underscore, space, or other special characters while naming a folder name, it will have specific ramifications. For example, placing the underscore or unique character at the start of the folder name forces the folder to “float” above the alphabetically sorted lists. But this trick can make way for several problems. Because it means the folder is not present in its usual place. Consider this if someone is browsing for the “Projects” folder, they will most probably not look for “_Projects’ ‘ folder at the top of the list. They may expect something in chronology like between the “Marketing” and “Research” folders. They may create the duplicate “Projects” folder that does not have the underscore in front.

FOLDERS & KEYWORDS Have a Deeper Relationship

Few tools and Digital Asset Management (DAM) systems generate keywords based on the folders in which the files are stored. Setting up your folder structure, think about keywords to be applied based on folder names. For example, if you have a file in a folder called “Business campaigns” inside the “Marketing” folder will appear in search results for “Business,” “campaigns,” or “marketing.”

To get comprehensive guidance on folder organization, access the free DAM Best Practices Guide.

Folders are POTENTIALLY REDUNDANT

You should avoid folders with overlapping categories. If you have a folder named Staff and another folder named office, you do not want to include staff information in both folders. It would help to create more specific folders. Or eliminate one of the two or place one folder inside the other folder. For example, the “staff” folder could go inside the “office” folder.

EMPTY SUBFOLDERS “TEMPLATE.”

Keep an open group of folders and subfolders for a ready template. This will help in having subfolders that can be used across the folder structure, especially if you create folders requiring a common group of subfolders in the future. It will help you in getting things sorted and done quickly. All you need is to copy and paste the template of subfolders into new folders. You do not have to create each subfolder manually.

Sort out VERY DISORGANIZED FOLDER STRUCTURES

If things seem too messy, start fresh to build a new well-planned folder structure. Just move the existing items to the right place in a new structure. Or choose a cutoff date when the old location transforms into a read-only archive. No changes get copied to the new location.

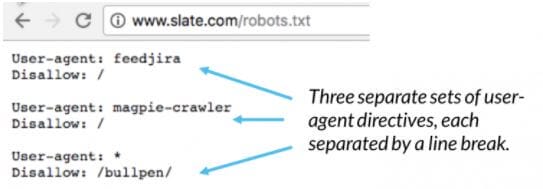

7. Tracking Parameters in the URL

The piece of code added to the end of a URL is known as the tracking parameter. It can then be separated into its components by the system backend for sharing information contained by that URL.

Some of the main groups of parameters to work with include:

Campaign parameters

Redirect parameters

Additional parameters.

Why are Tracking Parameters Important?

If a user clicks or views the ads, the advertiser seeks the information about the click or view. With a well-maintained record of such data, one can transfer the information about the interaction, helping to accurately measure the performance of that ad. Using the tracking parameters, you can capture snapshots at the time of the click. Also, you need to share the information that the campaign sparked the click. Also, look how the network served an ad to attract clicks and more.

What are the Benefits of Tracking Parameters?

Various parameters allow you the right level of specificity in reporting. They offer a space to provide the best levels of granularity to the captured information. This parameter helps to create a place where labels are attached to data. It enables the knowledge to get shared between systems. It also allows the information to be separated in a way allowing the humans to understand it.

What are the Types of Tracking Parameters in Technical SEO Audit?

A campaign parameter offers four levels of granularity using a tracker to provide a ‘space’ that helps to comprehend why a click or impression was done. Apart from that, these parameter spaces also capture the values with ‘creative,’ ‘campaign’ or an ‘adgroup’ for tracking parameters. The four spaces help to store information. One can drill down the data to get a greater context.

For redirect parameters, you can specify where the user must end up following an ad click. With a knowledge of the default tracker behavior, the user ends up at an app store page for your app. But perhaps you want a user to instead arrive at a landing page outlining a promotion? You would want a user to get a deep link directly on the product page within your app. This will help you to accomplish the redirect parameters. You have three redirect parameters for

Redirect

Fallback

Deep-link

These are parameters that will be powerful tools for driving conversions and enhancing UX.

On the other hand, additional parameters will also impact. The label parameter helps you to take care of all the additional information you require to transfer. But, it shouldn’t fit in a defined space like the one discussed earlier. Make sure that you also include additional cost parameters that allow you to track ad spend. You also need a referrer parameter that stores information about the referral programs in the server-to-server parameter. It enables an Ad Network to take care of the redirect to the store directly.

8. Session IDs in the URL

A session ID (SID) is a unique number a server assigns to the requesting clients. The word ID stands for an identifier that helps to track and identify user activity. The unique ID may be a numerical code, number code, or alphanumeric code. Technically, a session can be a temporary connection between a server and a client.

For search engine optimization (SEO), session IDs are a relevant topic for certain circumstances. And they can lead to problems like duplicate content.

Avoid Using Session IDs in URLs to Improve Your Search Ranking

Do not use Session IDs that will make search engine life interesting. A session ID will identify a particular person visiting a site at a particular time. It keeps track of the pages the visitor looks at and the actions the visitor takes in a session.

While requesting a page from a website, the server will send the page to the browser. As you request another page, the server will send that page also. However, the server is not aware if you’re the same person. Moreover, the server requires knowing who you are. This will help the server to identify you each time you request a page. The server does that with the help of session IDs.

Session IDs are used for various reasons. However, the primary purpose of a session ID is to allow web developers to create several types of interactive sites. Creating a secure environment, the developer can force visitors to visit the home page first. In other cases, the developers would like the visitor to resume an unfinished session.

A few systems can also help you to store the session ID in a cookie. But, the URL session ID of the user’s browser may be set not to accept cookies. An example of a URL containing a session ID:

http://yourdomain.com/index.jsp;jsessionid=07D3CCD4D9A6A9F3CF9CAD4F9A728F44

A search engine may recognize a URL included in a session ID. However, it probably won’t read a referenced page because whenever the search bot returns to your site, the session ID expires. The server will therefore do one of the following:

Display the error page in place of the indexed page. It is better to display an error page in place of an indexed page. Better still, go to the site’s default page. The search engine will index a page that is not present in session if someone clicks the link on the search results page.

Assign a new session ID. The URL that the searchbot originally used will expire; the server will replace the ID with another session and change the URL. Consequently, the spider will be fed multiple URLs for the same page.

If a search bot reads a referenced page, it may not index the same. Webmasters complain several times that search engines enter their site to request the same page again and again. However, the bot ultimately leaves without indexing the major part of the site. This is because the search bot gets confused due to the multiple URLs and leaves. At other times, the search engine does not recognize the session ID in a URL. If a client has thousands of URLs indexed by Google that are a part of the long-expired session IDs, they point to the site’s main page.

In the worst-case scenarios, the important search bots of a search engine will recognize session IDs to work around them. Apart from this, Google recommends that to use session IDs, you should employ a canonical directive to the search engines for the appropriate URL for the page. If you are using multiple session IDs, and your URLs look somewhat like this:

http://www.youdomain.com/product.php?item=rodent-racing-gear &xyid=76345&sessionid=9876

Hundreds of URLs can be effectively referencing the same page on the search engine. Use your section of web pages to put a tag on the correct page to tell Google about the page it should crawl.

Display

Session ID problems are now quite rare than they were earlier. Fixing a session ID problem helped sites that were invisible to search engines to get visible all of a sudden! Removing session ids can sometimes work like a miracle to fix a huge indexing issue.

you can do some more things if your website has a session ID problem apart from the canonical directive:

Use a cookie on the users’ computer to store session information. The server can check the cookie every time a page gets requested. It helps to check if the session information gets stored there. However, the server shouldn’t require cookies, or you can run into further problems.

Make sure that your programmer can omit session IDs when a search bot is requesting a web page. The server will deliver the same page to the search bot, but it does not assign the session ID. A search bot can travel throughout the site without the need to use session IDs. The process is used for agent delivery. Here, the word ‘agent’ refers to the device, which can be a browser, searchbot, and other programs requesting a page.

9. Broken Images in Technical Site Audit

Several broken images may also cause the same issues, like broken links, that we need to look at in greater detail. You will get broken links that are no good as they are dead ends for search engines. Moreover, this issue also leads to the search engines downgrading your website and lowering its visibility as they lead to a poor user experience.

How to Detect Broken Images on Your Website?

The easiest way to find broken links is using a special tool. Some of the highly effective common tools include Broken Link Checker and Free Link Checker.

“Site Audit” can offer you a detailed report about broken links and graphics. Tools like Xenu and Netpeak Spider are desktop programs that can help you get a non-existent link check. You can get the required report as you simply type your website address in any of these services to click a button:

10. Broken Page Links

Your website is a special asset where you need to put a lot of effort to ensure that it gets a lot of traffic. When your links are not working, it will take away all your hard work. They ruin your SEO efforts. Broken links will lower the flow of link equity throughout the site that can impact the rankings negatively. Being a top SEO Technical Audit Services Company, our team can offer the most comprehensive way to find and fix broken links.

You must check the broken links periodically on your website. Here is a step-by-step guide to helping you find and fix broken links present on your website.

Must Read: Broken Link Building Guide

Step 1: Track Broken Links

Several tools can help you to identify broken links. Google Analytics is one of the primary tools used for this purpose.

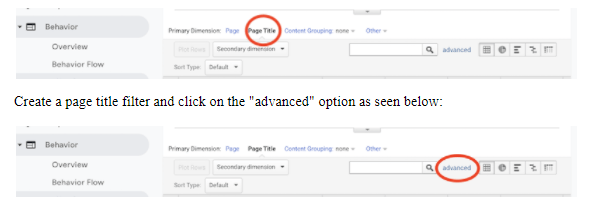

Google Analytics is one of the best free technical SEO audit tools to track website performance. It is instrumental to finding the broken links easily. Log onto your Google Analytics account. Click on the Behavior tab. Select “Site Content” followed by “All Pages.”

Set the evaluation period to check the data for the amount of time you want to check. For example, you want to check your broken links every month, set the period for a month after your last check.

You will see all your viewing options that are going to set everything to the default pages. Make sure that you select Page Title instead.

In the “advanced” window, set up the filtering that will go like this.

Include >Page Title>Containing>”Your 404 Page Title,”

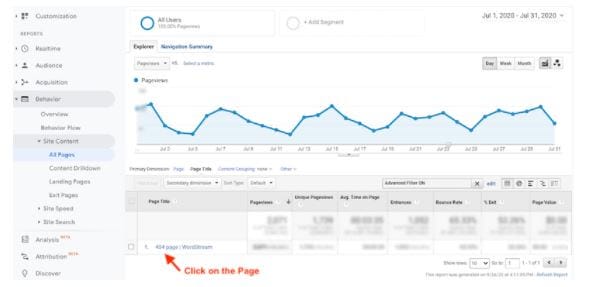

Click on “Apply.” It will present before you one or more page titles having the same name as the one depicted below for a one-month range.

As you click on the page title, you will see the various broken results on that 404 page. With a full-screen view, you can find the frequency of 404 error has occurred 2,071 times. As you reach the button of the page, you get more information as it has happened through 964 pages:

Export this report to a spreadsheet. Now you can proceed with fixing the links. You must know of all the places the broken links occur on your website. You may also have to set redirects to land on the intended page.

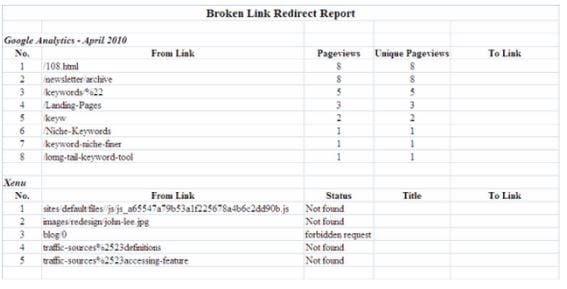

Step 2: Create a Report and Track Your Changes

You have the list of the broken links on the spreadsheet you’ve exported. Create columns on the excel spreadsheet to track link redirect processes. You will now have a Broken Link Redirect Report. Keep the data for broken links, page views, and unique page views in your Excel sheet.

As you export the data from Google Analytics, export it from Xenu as well. Now, organize the primary data from Google Analytics and Xenu into separate sections on your Excel spreadsheet.

Step 3: Decide Pages You Want to Redirect

Now, the preparation is done. Next comes the stage of analyzing. Google Analytics and Xenu offer you a list of links that can be broken. Now decide about the pages you want to redirect. But before you redirect them, make sure that you analyze the pages to discover why they are not working correctly.

From the Google Analytics section of the short sample above, we can see that not all broken links are created equal. It is important to understand that all broken links are created equal. Few links are visited quite often, and others are not visited in the same way. There can also be URLs due to human error. These are stray broken link URLs that you will visit only once. It is not worth fixing these kinds of URLs with a stray visit or two. But you must pay full attention to improving the broken links with multiple visits to fix them even if they are produced out of human error).

One can figure out the correct URL and fill the data in the spreadsheet for a few links. In case you go for other links, use a tentative URL and highlight it with a different color; you can link the other URLs to redirect them to the domain homepage. Conclusively, you just need to redirect the links with several visits and those ones having an error-causing rule.

The Xenu report does not display URLs recorded in your Google analytics due to typing errors. Xenu shows only the actually existing links that are live on the site. However, the URLs may still have certain character errors. We must identify these instances and dig out the reasons for the error. Only after this can you fix the problem in the most practical manner.

This step accomplishes your task once you’ve placed the links that must be redirected; document them all in your Broken Link Redirect Report.

Step 4: Redirect in CMS

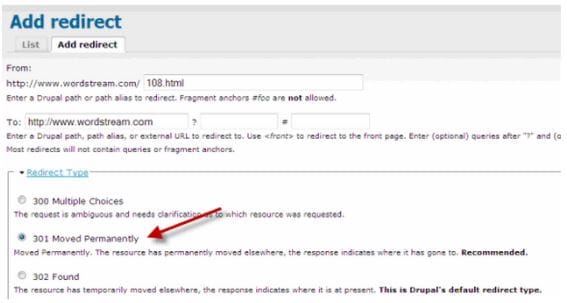

Here is an account of how you will redirect the links in your content management system. Let us use WordStream’s Drupal CMS as an example.

Access Administration – Site building – and then URL redirects. Add redirect. Fill the “From” and “To” blanks as you copy and paste the coveted link from your final Broken Link Redirect Report. Make sure that you notice the link format during the process. Choose “301 Moved Permanently” in your drop-down menu of the Redirect Type. Click “Create new redirect”.

Repeat the process to take care of all the broken links. Update the Broken Link Redirect Report.

Now you can experience more pride for your website as ALL your links work to help the users.“ The search engines are happy!

More on Broken Page Links

As we already discussed, broken links include web pages that users cannot find or access for several reasons. Web servers will return an error message if the user tries to access a broken link. Other ways to address broken links are known as “dead links” or “link rots.”

Broken Link Error Code

Here are few error codes a web server can return for a broken link:

404 Page Not Found: It gives the information that the requested page/resource is not present on the server.

400 Bad Request: It indicates that the host server is unable to understand the page’s URL. It marks the following:

Bad host: Invalid hostname: In this, the server name does not exist or is not reachable.

Bad URL: It is also addressed as a Malformed URL and may result in an extra slash, missing bracket, wrong protocol, etc.

Bad Code: It indicates an invalid HTTP response Code: The server response violates the HTTP spec.

Empty: The web server gives “empty” responses displaying no response code or no content.

Timeout: It occurs in the form of HTTP requests that get constantly timed out in the link check

Reset: In the reset option, the host server drops connections. It is busy processing other connections or gets reprocessed.

Why Do Broken Links Occur?

There are so many reasons why broken links occur, for example:

If the website owner enters the incorrect URL ( like misspelled, mistyped address, etc.).

If the URL structure of the site changes to (permalinks) without a redirect, causing a 404 error.

When the external site is no longer available, it becomes offline or gets permanently moved.

It also links to content (PDF, Google Doc, video, etc.) that has been moved or deleted.

interference due to Broken elements like HTML, CSS, Javascript, or CMS plugins.

Geolocation restriction not allowing outside access.

As we discussed earlier, broken links will affect the Google Search results. But, this wouldn’t impact the overall SEO. It is only if you have many broken links on a single page, it will indicate that the site is abandoned. The Search Quality Rating Guidelines use broken links to find out the quality of a site, but if you’re constantly checking the broken links or fixing them, it will help maintain the site’s quality.

11. Switchboard Tags

Much like canonical tagging, switchboard tagging is used to alert Google about another page similar to where the tag is located. It enables Google to index and rank the switchboard tags appropriately. One does not have to use them as separate sites that have the potential to affect your site’s rankings negatively. The specific tag is usually “bidirectional.” It is used on the desktop version and the mobile version.

At this link of Google Developers, you can find out what a tagging system usually looks like.

It means that you can place a similar tag similar on the desktop version of the website:

<link rel=”alternate” media=”only screen and (max-width: 640px)” href=”http://m.example.com/page-1″ >

However, you can place a tag like this one on your mobile version.

<link rel=”canonical” href=”http://www.example.com/page-1″ >

You need to implement both tags to make them work. If you have a mobile version of your site, you will surely want to take advantage of the switchboard tags. It offers a simple solution for a quick edit to help both versions of your site for their indexing and rankings.

12. Missing Breadcrumbs

Breadcrumbs (or breadcrumb trail) is the secondary navigation system. It displays a user’s location in a web app or a site. There are different types of breadcrumbs and the commonest ones include:

Hierarchy-Based Breadcrumbs

This is the commonest form of breadcrumbs that are generally used on a site. The breadcrumbs inform you about the site structure and the several you need to get back to the homepage. It can often be a sequence like this: Home > Blog > Category > Post name.

Best Buy gives you a good idea of where you are in the audio department

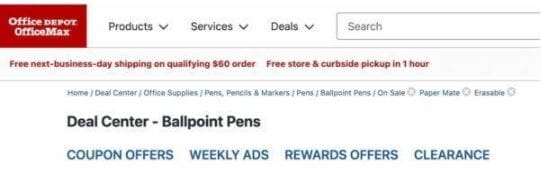

Attribute-Based Breadcrumbs

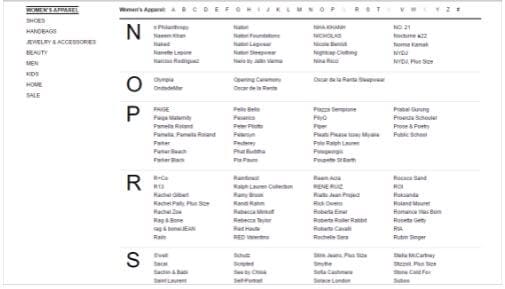

You will find these types of breadcrumbs on e-commerce sites, where the users search, leaving a the breadcrumb trail is made up of product attributes. for Example: Home > Product category > Gender > Size > Color.

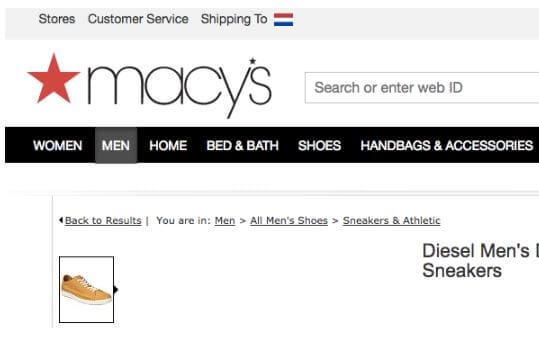

History-Based Breadcrumbs

History-based breadcrumbs carry out exactly what it says. You can order them as per your activities on the site. They will be seen somewhat like this- Home > Previous page > Previous page > Previous page > Current page. See Macy’s site example below to learn how you can make them function.

13. 404 responses without proper 404 page

13. 404 responses without proper 404 page

The 404 error (HTTP 404) is also known as a “header response code” or “HTTP status code” or just a crawl error. It corresponds to the “Not Found” or “Page Not Found.”

Check the definition for 404 errors below.

“The server has not found anything matching the Request-URI. No indication is given of whether the condition is temporary or permanent. The 410 (Gone) status code SHOULD be used if the server knows that an old resource is permanently unavailable and has no forwarding address through some internally configurable mechanism. This status code is commonly used when the server does not wish to reveal exactly why the request has been refused, or when no other response is applicable.”

– via w3.org

Put simply; it means that code tells users and the search engines that the requested URL or the resource being referenced literally cannot be found.

Imagine a robot giving a shrug and a blank look.

There can be varied kinds of response error types. Most URLs will return a response code of one sort or another. A correctly functioning page returns a “200” status code, which translates to “Found” in the computer language. The other response codes include the “server errors,” which include the HTTP status codes 500-599. Webmasters can diagnose the different error types going to the source of the errors to fix it appropriately.

404 errors is the common error type. They are generally handled incorrectly by even knowledgeable people. A 404 HTTP error is also known as a Client Error. The user’s web browser (Google Chrome, IE, Firefox, etc.) is addressed as the client here.

Occurrence of 404 Errors

A 404 error can occur in several conditions. For example, if a page has been removed or moved to a different website section and it stands nonexistent or like a deleted page that is forgotten and not redirected)

If a webmaster, or a software engineer mistypes the URL on a page or a “page template” or ends up with a copy-and-paste mistake (using a wrong URL link).

When web pages, social media posts, or email messages get broken links or accidentally truncated links.

The best type of soft 404s occurs when a page issues a 200 (OK) status in issuing the right kind of error for something that went wrong. In these cases, websites could issue 404 errors, but they do not. It indicates that 404 errors on the page are not working properly. At times, 404 errors can function in one part of your website but not in others.

Are 404 Errors Good or Bad for Search Engine Optimization (SEO)?

Practically, 404 errors are “bad” as they display errors on your website or on your web, relating to your website.

It is not hard to understand that a user coming across a 404 page will not want to return to that site later.

If a site has a lot of 404 header response errors or other errors like 403s, 500s across it, that can create a higher overall error rate versus success rate.

When Google finds many errors, like 404 errors compared to available pages, it can result in a lot of trust issues. It won’t like to send customers to your site. Google rates websites based on user experience and if a website is just full of errors, it gives users a bad experience. Google will likely downgrade your site for the trust issues.

If your website is linking to many non-functional pages, you are passing SEO equity into a void. It’s just like a purse with many holes in it; the money you put in is the money that drips out of it.

However, 404 errors will keep happening no matter what. But, the important thing when these errors do occur, they should be properly functioning. They must notify you that the 404 error occurred and its location. With this information in hand, you can go about fixing the error with a permanent 301 redirect. Keep in mind that the user and search engine should not have to face this issue again.

404 is seen as a bad error by few teams more than others. But the reality is errors will occur if you do not listen to the messenger” and want to correct the mistake; a 404 error ode will help identify that. It is necessary to issue a proper 404 status code to find issues and fix them easily. It’s only the unfixed 404 errors that will be potentially bad.

14. Bad URLs Do Not Return 404 Status Code

The web server should show you a 404 page. But you won’t find it’s you click on it. However, the functionality of the 404 code is not restricted to this alone. It will also tell the Google Bot where the document really exists. It means the page should return the appropriate HTTP status code or else it is defective.

How Do I Return the Correct HTTP Status Code for a 404 Error Page?

Content-Management-System or the web servers are generally not set up correctly. This leads to an error in the page that returns to either the HTTP status code 200 (OK) or a 301-redirect (sending the user and Google-Bot to another page). This will be a defective 404 page.

This article finds out information on the correct configuration of a 404 error page having an appropriate HTTP status code 404. It tells you the difference between:

A static 404 error page with the use of the .htaccess file and Apache webserver

The 404.php file and WordPress CMS in the theme-directory

15. Are the Session IDs of your Site Functional?

A website assigns a session ID, also known as a session token, to keep track of visitor activity. This is a unique identifier parameter for a specific user. It has a predetermined duration of time or session,

Two common uses for session IDs

User authentication

Shopping cart activity

One can use a standard method of delivering and storing session IDs with cookies; but, it becomes less feasible because a session ID also gets embedded in a URL in the form o a query string. And URL parameter session IDs and the Cookie impact the proper crawling and indexing of the content by the web search engines.

What happens in Session IDs Using Cookies?

Typically, cookie-stored session IDs are more secure as compared to the ones transmitted via URL parameters.

That said, an issue arises as Search engines ignore cookies.

But you can solve it.

This is how.

Provide alternative pathways to search engines to access web pages if you are using cookies to present content, either in the sitemaps or direct links.

To find out if cookies are preventing the bot from crawling your page, “Fetch as Googlebot” related to Google Webmaster Tools account,

URL Parameters for Session IDs

You can use URL parameters for session IDs. It will help in tracking visits or referrers. In such a case, you have to append the identifier to the URL.

The glitch that arises here is that new URLs created for an existing web page give rise to content duplicity issues. Check the URLs that point to the same content:

http://www.example.com/products/women/dresses?sessionid=66704

http://www.example.com/products/tools?sessionid=45365

http://www.example.com/products/tools?sessionid=45365&source=google.ca

How Can You Deal With This?

Here’s how.

Use canonicalization (described in detail in the article), whereby you tell the search engines the correct version of the URL you want to index.

Moreover, Google offers a URL parameters tool in its ‘Crawl’ section of Webmaster Tools. Using this, you can instruct on how Google must handle URLs containing parameters.

16. JavaScript Navigation

JavaScript Navigation is an essential part of technical SEO is JavaScript SEO. It involves making JavaScript-heavy websites search-friendly, enhancing their crawlability and indexability. The ultimate goal is to make the websites rank higher and get more visible in the search engines.

Many consider JavaScript bad for SEO. But is it true that JavaScript is evil? Not at all. It’s quite different from what most SEOs professionals are used to handling. But by putting in a slight effort, you can learn much.

Javascript is not perfect, and it may not always be the right tool for the job. But if you parse it more than you should, it can result in heavy page load and low performance.

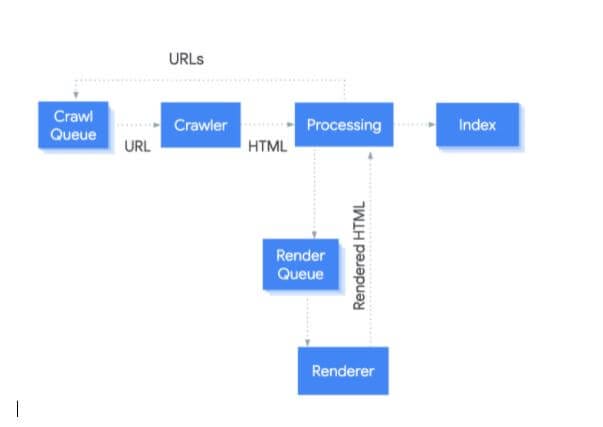

How Google Processes Pages with JavaScript

Earlier on, a downloaded HTML response could let you see the content of most web pages. However, with an increase in JavaScript, search engines must develop several pages like a browser to find out how a user sees the content.

Web Rendering Service (WRS) of Google is responsible for processing the rendering of pages. This image tells how Google covers the process.

Start the Process at a URL

First of all, the crawler comes into the picture:

Crawler Action

The crawler will send GET requests to the server, which response with headers and the file’s contents.

Google prefers mobile-first indexing, the request generally comes from a mobile source or user agent. Use the URL Inspection Tool in the Search Console to check and find out how Google crawls your website. Run the URL on the too; check coverage information for “Crawled as,” you will know if you are still on the desktop indexing or mobile-first indexing.

In this case, the requests are coming from Mountain View, CA, USA, but they will also do some crawling for locale-adaptive pages outside of the United States. But few sites will block visitors from specific countries or the ones using specific IP in various ways. This could imply that the Google bot cannot see your content.

Few sites can also employ user-agent detection to display content to various kinds of crawlers. Especially for the JavaScript sites, Google could see things as being slightly different than a user. The URL Inspection Tool present in Google Search Console provides for Rich Results Test and Mobile-Friendly Test. These tests are important for handling JavaScript SEO issues. They offer you information on what Google considers important for checking. For example, if Google is blocked, and they can still see content on your page. You will find more information about this in the section on renderer as there are vital differences in the downloaded GET request, the rendered page, and the testing tools.

Moreover, it is worthwhile to note Google may state the output of the crawling process for “HTML” as illustrated in the above image. However, Google is crawling and storing all their sources required to build the page, whether they are Javascript files, CSS files, HTML pages, XHR requests, API endpoints, and more.

Processing

Understanding the term processing mentioned in the various systems is essential. Read further to know about the several aspects of processing related to JavaScript.

Resources and Links

You need to understand that Google does not navigate from page to page like a random user. Instead, one part of the processing checks a page for its links to the other pages or files required to build a page. In many instances, Google pulls out the links to add them to the crawl queue, which is Google’s tool for prioritizing and scheduling crawling.

Google is also likely to pull resource links (CSS, JS, etc.) used for building a page from sources like <link> tags. But the links to other pages should be present in a specific format so that Google treats them as links. The different internal and external links must have a <a> tag with an href attribute. You can help the process proceed in several ways for the users with JavaScript that are not search-friendly.

Good:

<a href=”/page”>simple is good</a>

<a href=”/page” onclick=”goTo(‘page’)”>still okay</a>

Bad:

<a onclick=”goTo(‘page’)”>nope, no href</a>

<a href=”javascript:goTo(‘page’)”>nope, missing link</a>

<a href=”javascript:void(0)”>nope, missing link</a>

<span onclick=”goTo(‘page’)”>not the right HTML element</span>

<option value=”page”>nope, wrong HTML element</option>

<a href=”#”>no link</a>

Button, ng-click, there are many more ways this can be done incorrectly.

The internal links added with JavaScript do not get picked up till rendering has been done. Therefore, the rendering must be quick and not become a cause for concern in most cases.

Caching

As Google downloads files of different kinds, including JavaScript files, CSS files, HTML pages, they will be aggressively cached. Typically, Google ignores your cache timings and fetches new copies when it wants. You will understand more about this in the Renderer section coming ahead.

Duplicate Elimination

When crawlers find Duplicate content, Google will eliminate or deprioritize it from the downloaded HTML in rendering. With the prevalent use of the app shell models, small amounts of content, you might find a code that may result in the HTML response. Every page on the site will show the same code.

Moreover, this code can also be shown on multiple websites, giving an idea of the pages to be treated as duplicates. This means that the pages would not immediately go to render. And there can be greater implications like a wrong page or the wrong site may display in search results. However, the chances are that it resolves by itself over time. This can, however, be problematic, especially for newer websites.

Most Restrictive Directives

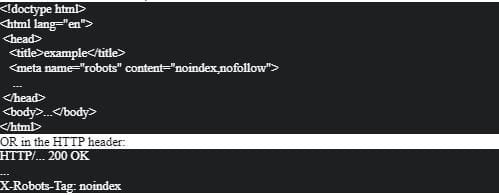

Google will choose highly restrictive statements between the rendered version of a page and HTML. If JavaScript changes a statement and that conflicts with the statement from HTML, Google will simply obey whichever is the most restrictive. Noindex will override index, and noindex in HTML will skip rendering altogether.

Render Queue

Every page goes to the renderer now. One of the biggest concerns from many SEOs with JavaScript and two-stage indexing (HTML then rendered page) is that pages do not get rendered for days or sometimes even weeks. According to Google, pages reach the renderer at a median time of 5 seconds. The 90th percentile was minutes. Thus, the amount of time between getting the HTML and the rendering of the pages generally is not a cause of concern.

17. Flash Navigation

Flash navigation is created to catch the immediate attention of the site’s visitors. It is found to work in many cases. However, the real question is whether flash navigation can get the search engine’s attention? Flash is generally used for slideshows and flash movies present on technological sites. It displays multimedia content on art and entertainment sites. Websites requiring search visibility are popular on web and flash websites. However, the flash elements on a website were unreadable by the search engine crawlers until June 30th, 2008, when Google announced its improved capability for index flash files for including them in their search results.

However, Flash websites or flash elements on websites also have other problems. For example:

No Inner Page URLs: If your website is built on Flash, it will be just one URL: the home page URL. With proper internal page links, you get very few opportunities to let your flash website show up in search results.

Slower Page Loading Times: Complete flash websites with a lot of flash content typically load slower. This problem will significantly reduce with better internet download speeds and browser compatibility.

Poor User Experience: Flash navigation websites are unconventional. They force the user to spend more time finding the right content on a flash website. If the site offers a compromised user experience, it becomes hard to rank the website high in the search engines like Google.

Indexed by Google Bots Only: Google is a search engine that officially indexes Flash. The search engines are text-based and index sites according to the HTML content. However, there can be glitches in Google indexing the flash websites.

Poor On-Page Optimization: Typically, flash websites or flash elements do not have on-page SEO elements like a header, image alt tags, meta title, meta description, and anchor text tags.

No Link Value: Search engine crawlers are unable to crawl links present in the Flash. A completely flash-based website may have only one link, such as a home page, and no internal page links. That’s why you do not get any link value for flash-based websites.

Hard-to-Measure Metrics on Google Analytics: Flash-based websites cannot track user behavior on the site. It is hard to understand the site performance metrics of flash-based sites.

According to research, more than 30 to 40 percent of websites use Flash. But, still, these sites get organic traffic from search results. At the same time, some of the flash sites belong to big brands that get popular by several other modes, such as offline marketing. Thus they get many natural links to their site. This naturally improves their search engine ranking. But other not-so-popular sites must incorporate SEO into their flash-based websites to optimize the site for search engine indexing.

Here are a few quick tips for entirely or partially flash sites that need to use SEO for a well-optimized site that grabs Google’s attention.

Use Multiple Flash Files

First of all, do not get your complete website designed in Flash. As discussed, it makes it difficult for sophisticated Google bots to index the site. Instead, split the distinct flash content into several separate files in place of a big file.

Add HTML Element to Flash Files

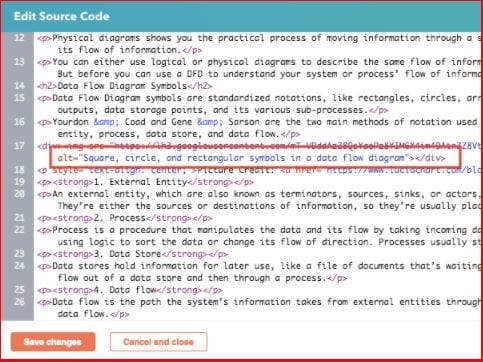

Search engine crawlers look for text content, and the HTML text is in a highly readable form than the one embedded in flash files. Moreover, Flash does not display external links like HTML. So, you must add HTML format content to your flash files. This will help these files to get seen by search engine bots. As stated earlier, flash files will do better when these are segregated into separate files. Each flash file must have a corresponding HTML page.

You should embed the flash files in the HTML while adding descriptive data to the site. This includes adding page titles, headers, image alt tags, anchor text, and metadata. In general, it can be advisable to avoid Flash as much as possible and also ensure using HTML for the most important elements of the site. Use regular text links as much as you can. Check the sample of two flash objects embedded in HTML for facilitating search engine indexing.

Optimize Flash Sites for All Browsers

While you optimize your flash website for SEO, do not forget that usability is another factor that must be taken into account while building flash-based websites. You can get an enhanced user experience. It will be an important factor for the search engine optimization of the sites. It is important to optimize the flash-based sites for your browsers to give enhanced usability. Use the Scalable Inman Flash Replacement method (slFR) or the SWFObject Method to learn more about the flash content into simple vanilla if a browser does not support Flash.

Avoid Using Flash for Site Navigation

Make sure that Flash should not be used for navigation on the website for two important reasons. The first reason is to avoid confusing navigation options. Improper navigation will interfere with the site’s usability. Secondly, the web analytics that tracks and measures your site’s performance does not give accurate data. It can be hard to measure the data, like the visited pages and also the parts of the site with a higher viewership. It also helps to track the stage when the visitor abandoned the site on flash-based sites.

Importance of Proper Sitemaps in SEO Technical Audit

Sitemaps facilitate the indexing of several pages by search engines. In the case of websites using Flash, it is important to use XML sitemaps. They are created to put them into the root directory.

The takeaway is that you should avoid using completely flash-based web designs because they are hard to be crawled and indexed.

And always build corresponding HTML pages for the flash pages of your website. This will allow for the easy indexing of your website, allowing your website to show up in search results for your keywords. If you’re using Google Analytics for tracking the performance of your site, then you must enhance the tracking of the flash elements by setting up special goals and funnels.

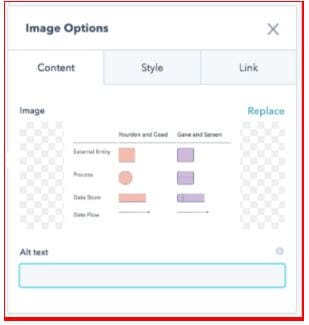

18. Images Used in Navigation

It is necessary to correctly use the images as this will help readers to get a better insight into your article. Though Google gives greater importance to the text, it also counts the images you have used. Your users will certainly appreciate getting a summary of what you are looking for in a chart or data flow diagram or get attracted to the attractive images on your social media posts.

Adding relevant and appealing images to all articles you write will prove helpful.

It will also make them more appealing. But you should also optimize the alt text of your images. The images are important for making your visual search increasingly important. You can see this in Google’s vision for the future of search. It will help to increase your organic traffic. Having visual content on your site makes complete sense to enhance your SEO. Therefore, you must place image SEO as a priority in your to-do list.

Finding the Right Image

It is better to use original images, like your product images or your photos of yourself or your workplace, in place of using stock photos. Having a team page with the pictures of your actual team will count greatly in increasing the authority and the trustworthiness of your website.

Your article requires an image in tune with its subject. Do not just go on to add a random photo to get a green bullet in your Yoast SEO plugin’s content analysis. The image you choose must:

Reflect and relate to the topic of your post

Place the image near the relevant text.

For the main image that you’re trying to rank, keep it near the top of the page, but it should not feel forcibly placed.

Images placed with related text rank better for the keyword they are optimized for. Let us find out about SEO images as we proceed further in this article.

Scale for Image SEO

Page Load speed is an important characteristic of user experience. Loading times are highly important for UX and SEO. The faster the site, the easier it is for search engines and users to index and use its pages. The pixels of the images can have a great impact on the loading time of the site. When you upload a large image to display it is really small; for example, in a 2500×1500 pixels image displayed at 250×150 pixels size, it stalls the loading process of the entire website. Therefore, resize the image and choose how you want it to be displayed. WordPress will help you to do this automatically as it offers multiple sizes of the image after you upload it. However, this does not mean that the file size is optimized as well. Take care of the size in which you upload your images.

Use Responsive Images

Most of the users access your site on their mobile devices. It means all your images on the website should be responsive. It will be readily done for you in WordPress as it was added by default from version 4.4. Moreover, the images must have the src set attribute, making it possible to serve a different image according to the screen width of the mobile devices.

19. 302 Redirects

A 302 redirect is one of the temporary redirects that helps users and search engines to land the desired page for a particular amount of time until it gets removed. It will be displayed as

302 found (HTTP 1.1)

Moved temporarily (HTTP 1.0).

A 302 redirect is done using a meta tag or JavaScript. So, there is less time spent on it than a 301 redirect which requires accessing server files taking additional time and effort.

Webmasters also prefer 302 redirects rather than 301 redirects. A few people hope to avoid Google’s aging delay related to a 301 redirect. But you must know the utility of both the redirects and only then use them appropriately because improper use can become an issue for the search engine. Google then might have to consider if the applied 302 or 301 redirects were meant to improve the search engine experience.

Many times, Webmasters simply use a 302 redirect when a 301 redirect was needed, just because they did not know about the difference. It can negatively impact your search engine rankings. Additionally, there can be problems like continued indexing of the old URL, and it may also lead to division link flow between several URLs.

When Should a 302 Redirect Be Used?

Knowing when to apply a 302 redirect is important. Use a 302 redirect for:

A/B testing for a webpage’s functionality and design.

Getting client feedback for a new page without having an impact on site ranking.

Updating a web page while providing viewers with a consistent experience.

Broken webpage, and you want to maintain a good user experience in the meantime.

302 errors are temporary redirects that are used if webmasters want to assess the performance or gather feedback. The redirects are not used to give a permanent solution

20. Meta-Refresh Redirects

We have talked about various web server redirects. But there is another category of redirects known as meta refresh redirects. This is a client-side redirect, unlike 301 and 302 redirects that will occur on the web server. A meta refresh redirect will instruct the web browser to reach a different web page within a specified time.

Example:

<head>

…

<meta http-equiv=”refresh” content=”4; URL=’https://ahrefs.com/blog/301-redirects/'” />

…

</head>

Meta refresh redirects are generally seen in the form of a five-second countdown with text that goes like “If you are not redirected in five seconds, click here.”

Google claims to treat the meta refresh redirects or other redirects; you are not recommended to use these, barring certain special cases.

For example, if you are using a CMS periodically to overwrite your .htaccess file. You can also redirect a single file in a directory using multiple files. Sometimes, a meta refresh redirect may lead to a few issues. For example,

It can confuse the users. If a redirect happens too quickly (like within 2-3 seconds), and the users of the browsers cannot click the “Back” button. Moreover, the users may experience page refreshes they did not initiate. This can be a cause of concern for your website’s security;

Meta refresh redirects may also be used by spammers to disorient search engines. If you use them quite often, search engines can consider your website being spam and deindex it.

The meta refresh tag does not contain a significant amount of link juice.

Thus, if it is not specifically needed on your pages, you can use a server-side 301 redirect in its place.

21. JavaScript Redirect

A JavaScript redirect offers an optimal way of redirecting because search engines need to render a page to get a redirect. Redirecting using a 301 redirect is always recommended (except if you’re looking to temporarily redirect).

JavaScript redirects are typically picked up by search engines, and they will pass authority.

If you do want to use JavaScript redirects, please be sure to receive consistent signals.

It will include the redirect target in the XML sitemap.

Allow for updating the internal links for pointing to the redirect target.

You can also update the canonical URLs for pointing to the redirect target.

Ways to Implement JavaScript Redirects

There are methods to recommend a function to redirect like with window.location.replace().

You can get an example of what a JavaScript redirect asunder:

<html>

<head>

<script>

window.location.replace(“https://www.contentkingapp.com/”);

</script>

</head>

</html>

This code sends the visitor to https://www.contentkingapp.com/ after the page loads.

The biggest benefit of using the window.location.replace function is that the current URL does not get added to the visitor’s navigation history. On the other hand, the popular JavaScript redirect window.location.href gets added. It can lead to a visitor being stuck in the back-button loops. Thus, don’t use Javascript redirect to redirect visitors to another URL immediately.

<script>

window.location.href=’https://www.contentkingapp.com/’;

</script>

22. Broken JavaScript & CSS Links

It’s no secret it requires a lot of hard work to run the site. It means that you must fix broken links as you find them. Otherwise, it can prove detrimental to all the hard work you have done earlier.

The problem of broken links can be a lot worse with outbound links. You will not be getting notified of the change in your linked website. Therefore, you should have a daily check to see whether all of your links are still working.

Broken Links Can Be Very Harmful to Your Site in Two Ways:

Destroy user-experience. When users find a dead-end link like 404, they feel annoyed.

Bring down the SEO effort. Broken links will curb the link equity flow on the site that will have a negative impact on the rankings.

Make sure that you prevent these kinds of broken links on your entire site. Read the possible solutions further.

Why Broken Links Are Bad?

Broken links create an impression of callousness. If the users get a 404 Page error, they will think as if you don’t take your website user experience and content pretty seriously.

Broken links can be very frustrating. Having broken links on the website translates to disappointing your site visitors. When visitors cannot find what they want, they feel annoyed and do not get a good impression of you.

Fixing Broken Links

Fixing the broken links gives an impression that you will be serious about keeping your site fresh and alive. SEO will surely be highly effective if you are updating your site regularly. It signals the search engine sites that you are maintaining the site.

Broken links can greatly impact your site’s SEO. When all the links on your site are working, it ensures that your site is more accessible on the search engines. However, if you don’t fix these links, your site will get a negative rating for search engine optimization.

Reason for Broken Links

Here are a few common reasons why a link may become broken:

The web page is not present anymore.

The server hosting the target page has stopped working.

Blockage due to content filters or firewalls

23. Unnecessary Redirects

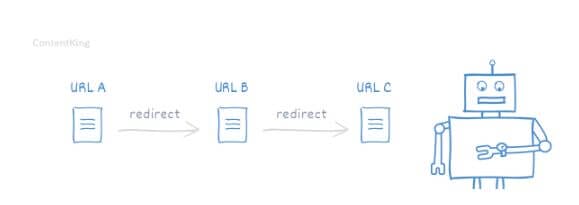

A redirect chain will make the user get multiple redirects between the initially requested URL and the final destination URL.

Consider this, URL A redirects to URL B, which it redirects to URL C. It means it would take a longer time for the final destination URL to load both for visitors and search engine crawlers.

Redirect chains are an impending problem. Some reasons for these include:

- Oversights: In this case, the redirects are not noticeable to the human eye. It means that while employing a redirect if you are not aware of where you are placing it, you’ll unknowingly create a redirect chain and won’t even notice it.

- Site Migrations: Several times, people forget to update their redirects as website migrations occur. For example, if you’re switching from HTTP to HTTPS or changing the domain name, you might implement more redirects without updating those already present.

How do Redirect Chains Impact SEO?

Redirect Chains can have a bad impact on SEO in various ways.

- Delayed crawling: Typically, Google will follow just up to five redirect hops in a crawl. Following this, Google aborts the task to avoid potentially getting stuck and saving crawl resources and. It will have a potentially negative impact on your crawl budget and lead to indexing problems.

- Lost link equity: Keep in mind that all page authority or link equity will simply pass through a redirect. Every hop in the redirect chain decreases the page authority passed on. Consider this, there are three redirects in a chain, and you are losing nearly 5% of link equity for each redirect. This implies that the target URL gets only 85.7% of the link equity from originally passed on.

- Higher page load time: Redirects lead to higher page load times for users as well as search engines. This further leads to crawl budget loss. Whenever a search engine bot gets returned with a 3xx status code, it will request an additional URL. Otherwise, the search engine bots need to wait, but that means that they get less time to crawl other pages.

How to Clean Up Unnecessary Redirects?

Sites that have been around for a while on the internet gather a cluster of 301 redirects. Most of these redirects offer you authority and are important to keep your traffic stable.

However, you will come across redirects that get stale and stop offering any SEO value they once did. This is because the redirects were built for specific purposes, such as removing old site architecture from Google’s index or removing old vanity URLs.

In such cases, when few redirects stop offering any value to the site, consider cleaning them up as each time the browser looks for the URL, all of those redirects are checked to determine if the requested URL must be sent elsewhere. It can have a serious effect on your load time and result in the users leaving your site. In addition, it can be the reason for redirect chains, as discussed above.

As a marketing specialist you sometimes come across internal links with ‘rel = “nofollow”‘ attribute. It might seem like a good idea to add ‘nofollow to’ unimportant internal links in order to let pagerank flows to the important parts of your page. Unfortunately it is not. We explain why below.

Nofollow is Meant to Prevent Spam

Links on a page pass page rank to other pages. By adding the nofollow attribute tp a link, we ensure that this link no longer passes on a page rank. This nofollow attribute is designed to prevent spam. Webmasters can add the nofollow attribute to links in comments and on forums in order to counteract ‘comment spam’. For example.

<a href=”https://www.example.com” rel=”nofollow”>look at my homepage</a>

Internal Nofollow, A Bad Idea

Internal nofollow links are actually always a bad idea. It is a wrong solution with more adverse consequences than you may have thought. Google’sd Gary Illyes also tells us that internal nofollow should not be used.

For all types of problems there are better solutions than ‘nofollow’

Nofollow does not shape pagerank like you would expect. Nofollow works like a black hole and loses pagerank.

Nofollow indicates that you do not trust a page (enough), which can lead to a higher spam score.

Better Solutions to ‘nofollowing’ Internal Links

There are a number of reasons to use internal nofollow links. You will find the most important ones below with additional tips for better pagerank distribution,

1. The Receiving Page is not Important Enough

This is actually the classic ‘pagerank scultping’ argument.

Pagerank is not otherwise controlled by nofollow but rather evaporates. Moreover, the use of nofollow stops the ‘pagerank flow’. You actually build a dam while you want to move on like that.

If a page is really not important enough, it is best not to link to it.

2. The Receiving Page is of Low Quality

Ai ai ai, you are now trying to hide the fact that you have poor quality pages on your site by masking internal links to those pages. Unfortunately, this is not how it works, Google will detect these pages anyway and will adjust the quality score of your site accordingly. Poor quality pages should be improved or excluded from the index via the robots.txt or meta tags / server headers.

3. The Receiving Page is a ‘Duplicate Content’ Page

Many web stores use / abuse the nofollow attribute at the category level. Product overview pages can be sorted in many ways but the content remains the same. This has duplicate content. Some webmasters have given the links to these pages a nofollow.

Also this is not solved with an internal nofollow but with a canonical where you indicate that the original of this page can be found elsewhere.

25. Site Contains Page Errors

When you click a URL to a site or enter the same in the address bar of any browser, the page will load your device. However, at times, something may go wrong, resulting in an error.

There can be several types of website errors leading to the above situation. Due to this, the URL gets assigned a three-digit HTTP status code.

Typically, you are likely to see error codes in the 400-499 range. These will reflect an error on the user’s side (the web browser). But when you get status codes in the 500-599 range, they indicate a problem on the server-side. Here are some of the commonest errors you encounter for browsing the internet.

Bad Request: Error 400: This is a generic error you get when the server cannot understand the browser’s request. It can occur due to several reasons.

It was not set correctly.

It was corrupted along the way.

Several factors lead to 400 errors.

A bad internet connection.

A caching issue.

A browser malfunction.

Always ensure clearing your cache, check your connection and settings, try different browsers, or simply retry.

Authorization Required: Error 401: The 401 error generally occurs when you access a password-authenticated web page. The user has to obtain the right password through the proper channels.

Forbidden: Error 403: If you try to load a web page that you don’t have permission to access, you will get a 403 error. It means you have entered a URL or clicked on a link going to a page having the set up for access permissions. It means you must use the account with the right type of authorization to access the page. Reaching the website’s home page first, you can go to the desired location. Find out if there is an option for account signup.

Not Found: Error 404: This is one of the commonest errors that says that the server cannot find the page you’re looking at. It might have occurred because you have not entered the correct URL. Therefore, check thoroughly for the spelling, punctuation, and suffixes (.com, .net, .org, etc.) of the domain name and try again.

Method Not Allowed: Error 405: The 405 error is less common and is not as easily defined as the others. When the server can comprehend what the web browser is asking for, it refuses to fulfill the 405 results. The error can also be the result of a faulty redirect due to the website’s code.

Internal Server Error: Error 500: The 500 is similar to the 400 generic code error pointing out unspecified problems. If a server faces an issue to concede to a request due to reasons that do not match other error codes, 500 error results.

Service Unavailable: Error 503: The 503 error signals that the web server cannot process the request due to certain reasons:

The website is undergoing maintenance

Overloaded with requests.

It is best to try again later to override the 503 error.

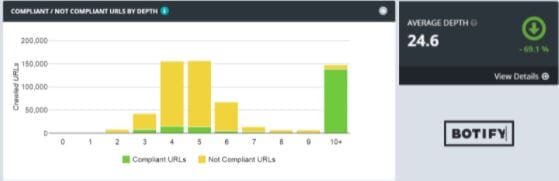

26. Incorrect Faceted Search

Faceted navigation is the most common issue in eCommerce sites. It gives the website visitors to filter results choosing from facets, a collection of sortings and details, and specific enhancements.

Usually, a large selection of products on any category page can be overwhelming to the visitors. The best way to give them a great UX is to provide them with relevant facets that allow them to narrow down their search results to easily find the ideal product they are looking for. Each filter will append additional parameters to the category page’s URL and generate a few unique page versions. Typically, these filters are used in unlimited combinations, causing a 100-page strong domain to form thousands or millions of indexable URLs.

Here is an example of a page of the shop with T-shirt category Izzi Shop-izzishop.com/products/tshirts/ offers several facets to the user for narrowing down results parameters such as:

Color

Style

Pricing

Brand

Size

Material

Features like cats on the shirt

And more.

The base URL transforms into several different versions on applying all these facets, each of which indicates a uniquely indexable page. See below.

- izzishop.com/produkte/tshirts/?size=14

- izzishop.com/produkte/tshirts/?price=0-20?contains-cats=yes?color=purple

- izzishop.com/produkte/tshirts/?style=baseball?price=20-50?size=12

- izzishop.com/products/tshirts/?size=14

- izzishop.com/products/tshirts/?price=0-20&contains-cats=yes&color=purple

- izzishop.com/products/tshirts/?style=baseball&price=20-50&size=12

Sometimes, it makes perfect sense to index the faceted navigation URLs. However, it is possible only if you have significant demand and will get potential value for enough products to justify the sole existence. It provides a worthwhile search demand for a lucrative long-tail query like “black leather shoe size 42”. It’s vital to ensure a domain has an optimized URL for targeting that search term.

Faceted search is thus an intelligent strategy for SEOs to analyze the performance of this type of URL properly. The strategy will help optimize all the valuable pages while cutting out all the useless weight that lowers the strength of the entire domain.

Impact of Faceted Search on SEO?

Due to the higher usability of a faceted search for your website’s visitors and the product browsers, faceted navigation can lead to several critical issues in the search engines.

John Mueller has created the highest quoted points of 2018 as the “crawl budget is overrated.” It is true for the most part.

Several domains with a keen SEO eye seem to be concerned about pruning the deadweight content for this reason. In the grand scheme of the web, there is not any reason for concern. But, the domain having rogue faceted search URLs will turn hundreds of pages into millions and lead to several unwanted problems, such as:

Duplicate Content

If multiple versions of similar URLs do not have any significant difference in value they offer, they will amount to duplicate pages, but this will be a red flag for Google. It can also lead to a manual penalty that causes dramatic traffic loss or algorithmic penalty, gradually punishing the domains with low value and weak content over time.

This means that even if you have fantastic, high-quality pages that deserve to perform well, the increased share of duplicate URLs surrounding those can be detrimental to your entire website’s performance.

Wasted Crawling Resources